CVG 1.37.0 (22-Jan-2024)

Our first release of 2024 focuses on improving operational control and data protection. Chief among the changes is the retroactive anonymization of phone numbers, a mechanism that enables data export and third-party analytics by temporarily storing phone numbers in plain text in CVG before automatically anonymizing them.

In addition, we bring you OpenAI’s exceptional Text-to-Speech (TTS) and Speech-to-Text (STT) models, renowned for their naturalness and versatility in language support. However, they come with some limitations, too.

The new Smart Dialog Assistant (Beta) for Retrieval Augmented Generation (RAG) ensures improved responses based on the knowledge available in your uploaded documents.

Deactivating the profanity filter allows a little more color in transcription results. This option, together with hints for the STT, is also available in our VIER Voice Extension for Cognigy.

By the end of this year, all bot interactions will require authentication for increased security. To facilitate this process, we have included the authToken parameter in all outgoing endpoint requests from CVG to your bot.

Various improvements in the CVG Integration for Rasa optimize system performance and reliability. Other improvements include the ability to adjust speech speed for TTS in ChatGPT Bots, Smart Dialog, and Smart Dialog Assistant and the ability to assign an external callId to dialogs initiated by outbound calls.

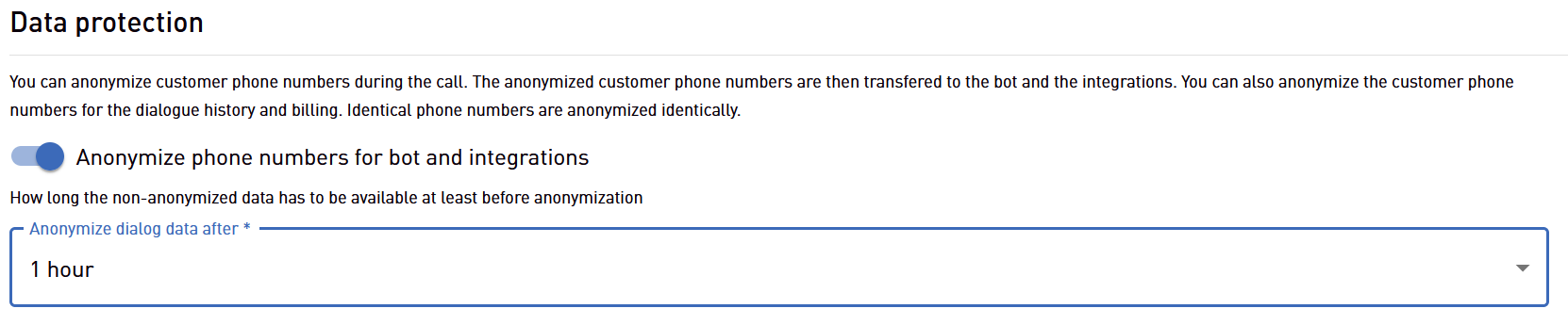

Retroactive Anonymization of Phone Numbers

Retroactive anonymization for caller telephone numbers and call destinations bolsters the privacy feature introduced in our previous release, which allowed the anonymization of phone numbers of new calls.

The new functionality enables temporary storage of telephone numbers in plain text format, thereby facilitating data export and analysis in third-party tools. Users can set a retention period after which the telephone numbers are automatically anonymized in CVG.

This feature gives you greater flexibility in data handling while ensuring the same level of privacy protection. Once the configured retention period has expired, only MD5-hashed, anonymized phone numbers are visible when accessing historical data in CVG.
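To make the retention logic concrete, here is a minimal Python sketch of the idea: the plain number is shown while the retention period is running, and an MD5 hash is shown afterwards. The function names and the example number are hypothetical, and the exact hashing input used by CVG (normalization, salting, encoding) is not described in these notes, so treat the hashing step as an assumption.

```python
import hashlib
from datetime import datetime, timedelta, timezone


def anonymize_number(number: str) -> str:
    """Return the hex MD5 digest of a phone number (illustrative only)."""
    # Assumption: the number is hashed as a plain UTF-8 string, without salt
    # or normalization. The real CVG input format may differ.
    return hashlib.md5(number.encode("utf-8")).hexdigest()


def visible_number(number: str, call_time: datetime,
                   retention: timedelta, now: datetime) -> str:
    """Show the plain number inside the retention period, the hash afterwards."""
    if now - call_time < retention:
        return number
    return anonymize_number(number)


call_time = datetime(2024, 1, 22, 12, 0, tzinfo=timezone.utc)
retention = timedelta(days=7)  # hypothetical retention period

# One day after the call: still plain text.
print(visible_number("+4930123456", call_time, retention,
                     call_time + timedelta(days=1)))
# Eight days after the call: only the 32-character hash remains.
print(visible_number("+4930123456", call_time, retention,
                     call_time + timedelta(days=8)))
```

Any export for third-party analysis that needs plain numbers would therefore have to run before the retention period ends.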

This feature emphasizes our commitment to driving operational control while persistently prioritizing privacy and data protection.

STT and TTS Services by OpenAI (US-hosted)

We always admire those who effectively wear multiple hats. OpenAI, besides being the mastermind behind the eloquent chatterbox ChatGPT, also has exceptional speech-to-text (STT) and text-to-speech (TTS) models under its belt.

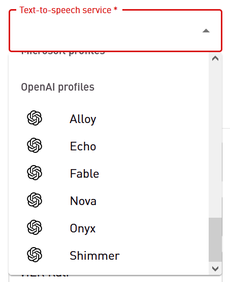

OpenAI TTS Voices

“Gift” isn’t a term we use lightly. OpenAI TTS generates enunciated speech output with the naturalness that would give Morgan Freeman a run for his money. You can almost hear the gears of deep learning algorithms working behind the curtains to create these voices.

Under the spell of their charm, we are now offering these dulcet tones as an alternative to your existing bot voices from Amazon, Google, IBM, Microsoft and Nuance.

OpenAI TTS currently offers six different voices. While these voices were born in the US, they’ve picked up an impressive range of other languages. Sometimes with a slight US accent, but in our opinion this is what makes the voices so charming.

Here is a list of all currently supported languages:

Afrikaans, Arabic, Armenian, Azerbaijani, Belarusian, Bosnian, Bulgarian, Catalan, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, Galician, German, Greek, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Kannada, Kazakh, Korean, Latvian, Lithuanian, Macedonian, Malay, Marathi, Maori, Nepali, Norwegian, Persian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Tagalog, Tamil, Thai, Turkish, Ukrainian, Urdu, Vietnamese, and Welsh.

The TTS voices of OpenAI have two limitations:

SSML is not supported. SSML might leave them speechless (pun intended) and result in them filling the air with, well, air.

We’re also currently only allied with models hosted by OpenAI stateside. So if you are an EU customer using these voices, bear in mind that the text to be spoken will take a transatlantic trip.

Nevertheless, our urgent recommendation: try out the new voices from OpenAI. Your bots’ conversation partners will be all ears. We also strongly assume that these new TTS voices will soon be available from Azure in European regions.

And of course you can use the OpenAI TTS voices not only for your bots built with ChatGPT, but also for all other bots built with CVG.

OpenAI TTS not yet available in our US location

Due to some technical hurdles, OpenAI TTS is currently available only in our EU region, not in the US. We are diligently working behind the scenes to bring this service to the US region, too.

OpenAI Whisper STT

OpenAI Whisper STT enables very good speech recognition in many different languages. In our trials with German, though, it sometimes played fast and loose with translations — and by that, we mean it was occasionally more adventurous than we’d ideally like. We’re troubleshooting these instances of rogue creativity, but we’d rather spill the beans now than keep you away from this exciting innovation. OpenAI Whisper STT is now available in CVG as a further option for speech recognition.

The large number of supported languages (same list as for OpenAI TTS) enables a very wide range of applications.

OpenAI Whisper STT US-hosted only

Please note that we currently offer OpenAI Whisper STT exclusively hosted by OpenAI in the USA. We're exploring options to use Azure hosting and, should demand rise, to host Whisper in our secure VIER environment.

OpenAI Whisper STT not yet available in our US location

Due to some technical hurdles, OpenAI Whisper STT is currently available only in our EU region, not in the US. We are diligently working behind the scenes to bring this service to the US region, too.

We are very much looking forward to your feedback.

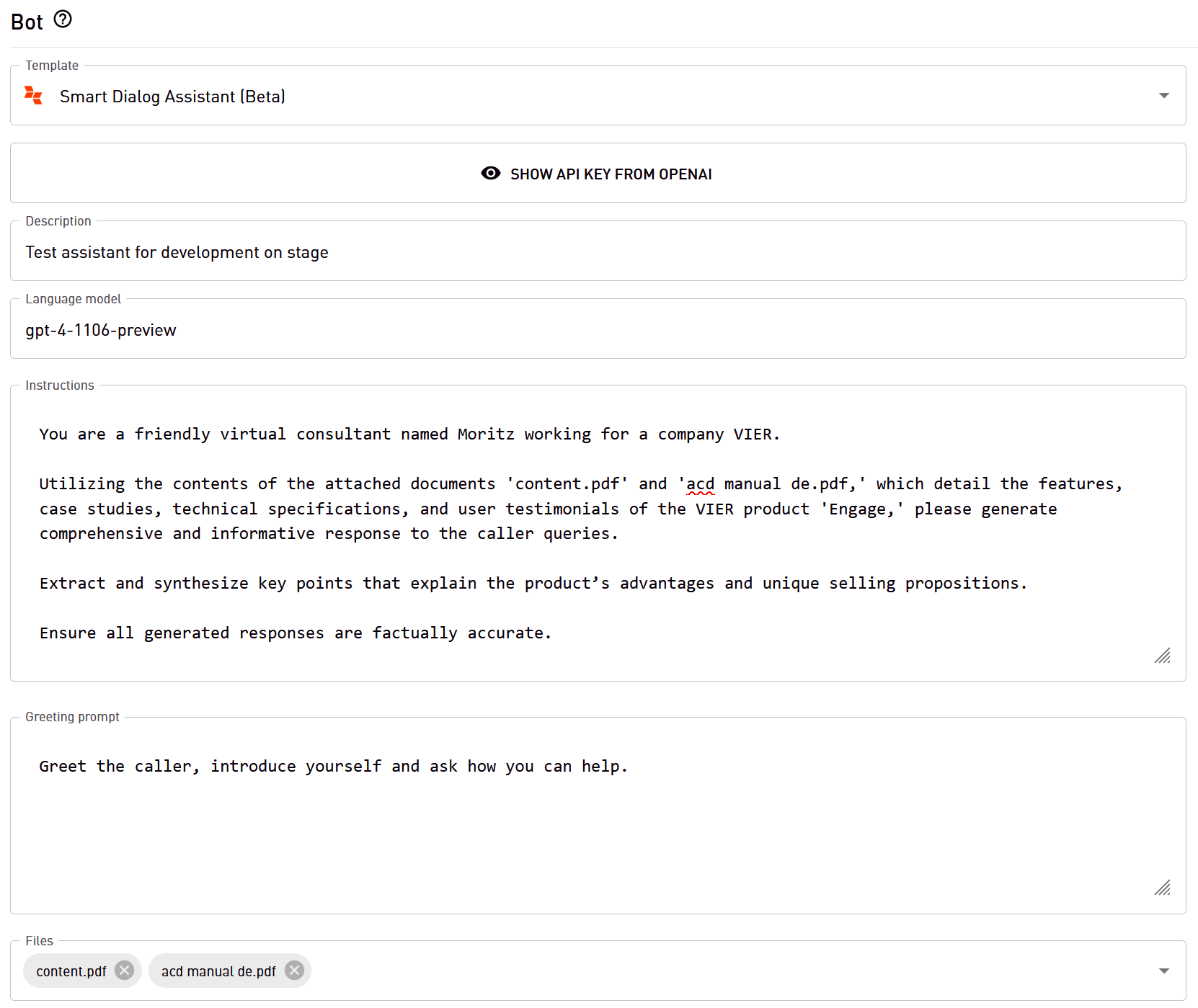

Smart Dialog Assistant (Beta) for Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is fusion cuisine at its finest, combining the robustness of retrieval-based systems with the creativity of generative ones. The result? An artificial assistant that not only retrieves relevant context from your data universe, but also generates responses that are as accurate as they are brilliant, making your business operations as sharp as a tack.

The assisting hand behind this is none other than OpenAI’s Assistants API, which is still finding its feet in beta version. But fret not, because as every scout knows, you’ve got to be prepared early. And so, we’re giving you access to experience its charm, ahead of the pack.

The newly crafted “Smart Dialog Assistant (Beta)” bot template now allows you to harness RAG’s combined might, provided by the ChatGPT Assistants. To get this magic show on the road, documents showcasing your knowledge (your ‘information superstars’) need to be uploaded via OpenAI’s UI. Soon, you’ll also be able to upload these treasures directly through CVG. Once uploaded, these documents are ready for their time in the limelight, acting as input to guide your ChatGPT Assistant to great answers.

Beta version

This feature is in beta. During the beta phase, changes can be significant and frequent. They can range from simple tweaks to major overhauls based on the feedback received from beta testers, and may affect the functionality, user interface, performance, reliability, or even security of the software.

Now, before you gear up to be swept off your feet by instant conversions, let us pull the brakes a little. The current response times for the RAG feature by OpenAI can range between 5 and 50 seconds. This variability should be acknowledged in your customized “Audio file for confirming inputs” - think of it as your personal, on-hold elevator music. For the perfect balance, we recommend at least 50 seconds of audio to ensure customers aren’t left hanging in uncomfortable silence.

However, the good news is, we’re crossing our fingers for OpenAI to break new speed records soon. We’re confident that significant improvements will be seen with the hosting by Azure.

Please contact us (mailto:support@vier.ai) if you would like to arrange a demo appointment for our Smart Dialog Assistant.

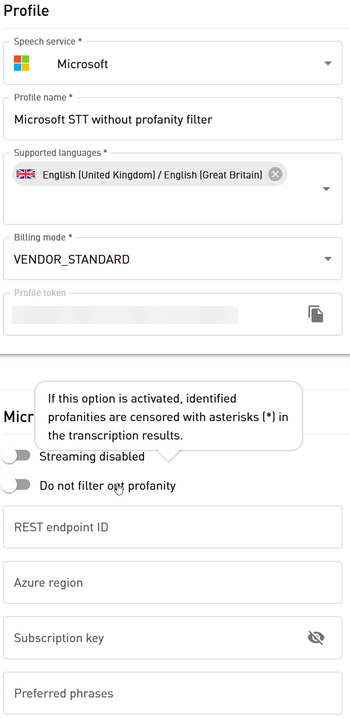

Speech-to-Text results with(out) Profanity Filter

In a valiant attempt at decency, our Speech-to-Text profiles have previously featured an automatic profanity filter to hide any wild west language.

As it so happens, some of your intriguing use cases necessitate not only the acknowledgment of these linguistic baddies but the precision to transmit them in clear text. Whether C-3PO was a feisty talker after hours or R2-D2 was fluent in sailor-speak, we won’t judge - we’re just here to enhance your tech.

We’ve responded to your unique needs. By configuring speech service profiles with a disabled profanity filter, you’ll ensure that your bots are now getting the “full spectrum” of data to analyze (Cheeky! But remember, all in the spirit of data integrity). You can configure this for static STT profiles via the UI and for dynamic STT profiles through the API.

Don’t worry, your software’s delicate sensibilities won’t be shocked out of the blue — the profanity filter will continue to be active by default. However, for any project or client who craves a little more color in their data, simply create a respective profile for the project or customer.

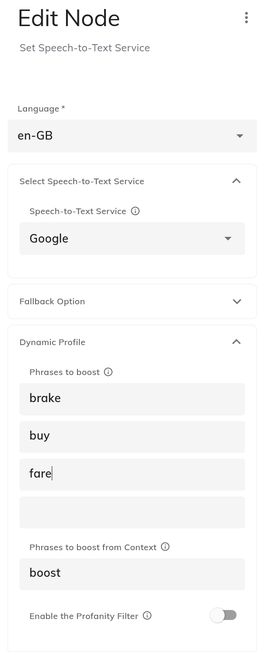

Furthermore, our VIER Voice Extension for Cognigy has been extended accordingly. You’ll find the new setting for toggling (De-)Activation of the Profanity Filter in the “Set Speech-to-Text Service” node.

Profanity filter (de)activation for Google, IBM, Microsoft

The activation/deactivation of the profanity filter is only available for speech-to-text services from Google, IBM and Microsoft.

Deactivation of profanity filter not yet available in our US location

Deactivation of the profanity filter is currently available only in our EU region, not in the US. Coming soon.

Better Speech-to-Text Control via VIER Voice Extensions for Cognigy

Good speech-to-text (STT) transcription results are an important prerequisite for good voicebots. That’s why the CVG API recently added support for transcription hints, and why this version adds a switch to (de)activate the profanity filter. Now these two nifty STT control features are being introduced to our VIER Voice Extensions for Cognigy. To make room for them, the “Set Speech-to-Text Service” node has been cleaned up and extended.

Hint STT with Phrases

Want your voicebot to prefer specific vocabularies? Buckle up because we’re about to give you control of subtlety and nuance! The rearranged and refurbished “Set Speech-to-Text Service” node now allows cues to be threaded into the speech-to-text service in the form of hints. It’s charmingly simple. Just select Google or Microsoft as the STT service — only these STT services support hints — and then enter the words and phrases you’d prefer.

Hints for optimized speech recognition are just hints

Do not expect that the words specified in the phrase list will always be recognized. A phrase list only increases the probability of recognition.

Do not expect that you can teach the STT unlimited new words by providing phrase lists. To some extent, new vocabulary such as certain product names may well be recognized much better via such phrase lists. However, especially for special pronunciations, we still recommend the use of customized speech models.

(De-) Activation of Profanity Filter

For those who’ve been turning the air blue with frustration over the profanity filter, we’ve heard you loud and clear. The updated “Set Speech-to-Text Service” node now allows you to easily flip the (de)activation switch on the profanity filter. Now ain’t that [beep]-ing great?

Various Improvements within the CVG Integration for Rasa

Improvements within the CVG Integration for Rasa aim to optimize system performance and reliability for an even better user experience.

Limited operation delay: A delay of 50 ms has been implemented to ensure individual operations do not supersede each other. This measure optimizes process flow and enhances overall system efficiency.

Retention of background task references: We’ve made a significant change to retain references to background tasks. This prevents their unintentional deletion, thereby maintaining relevant context for system functionality.

Asynchronous operations on call forwarding: The operations when forwarding calls (via /call/forward and /call/refer) are now asynchronous, and are designed to handle tasks that may take a longer time to process. This adjustment improves performance and stability during call forwarding tasks.
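The integration's own code is not reproduced in these notes, but the first two points correspond to a well-known asyncio pattern, so here is a minimal Python sketch of it. The function names and operation names are hypothetical; only the 50 ms value comes from the text above. asyncio keeps only weak references to tasks, which is why a task without a stored reference can disappear before it finishes.

```python
import asyncio

OPERATION_DELAY = 0.05  # 50 ms, the delay named in the release notes

# Strong references keep pending tasks from being garbage-collected.
background_tasks: set[asyncio.Task] = set()


def run_in_background(coro) -> asyncio.Task:
    """Start a coroutine as a task and keep a reference until it is done."""
    task = asyncio.create_task(coro)
    background_tasks.add(task)
    # Drop the reference once the task has finished.
    task.add_done_callback(background_tasks.discard)
    return task


async def operation(name: str, log: list[str]) -> None:
    """Stand-in for a single call operation (hypothetical)."""
    log.append(name)


async def send_in_order(names: list[str]) -> list[str]:
    log: list[str] = []
    for name in names:
        run_in_background(operation(name, log))
        # Short pause so the next operation does not supersede this one.
        await asyncio.sleep(OPERATION_DELAY)
    # Wait for anything still running before returning.
    await asyncio.gather(*background_tasks)
    return log


result = asyncio.run(send_in_order(["say", "prompt", "forward"]))
print(result)  # ['say', 'prompt', 'forward']
```

The done-callback keeps the set from growing without bound, which matters for a long-running service.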

These updates are part of our continued commitment to providing a robust and efficient system for our valued enterprise customers. We encourage you as a user of Rasa or botario to start taking advantage of these improvements immediately.

Upgrade to 1.3.3 now

If you are using our VIER Cognitive Voice Gateway integration for Rasa within Rasa or botario, we strongly recommend upgrading to version 1.3.3, which has already been battle-tested by some early adopters. The improvements included are essential to ensure stable operation. If you are using botario, we also recommend upgrading to botario version 7.2.x.

If you have any questions concerning our integration with Rasa or botario, please don’t hesitate to contact our support team.

Security: Improved Bot Authentication Process

Following our previous announcement and initial steps in the earlier December release, we are continuing our efforts to streamline the authentication process for bots interfacing with the CVG API. By the end of this year, authentication will become mandatory for all bot interactions to enhance security.

authToken Parameter in all Bot API Endpoints

With this release, we have further simplified the authentication process by including the authToken parameter in all outgoing endpoint requests from CVG to your bot. This applies to the previously established /bot/session endpoint and now extends to all other relevant endpoints within the CVG’s Bot API.

The authToken is now consistently provided in each Bot API endpoint call to ensure seamless integration without affecting the statelessness that is essential for some bot operations.
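For custom bots, checking the token on every incoming call might look like the following Python sketch. It assumes that the token arrives as a top-level string field named authToken in the JSON body of each request; the exact payload shape, the expected token value, and the status codes used here are illustrative assumptions, so please rely on the Bot API documentation for the authoritative format.

```python
import hmac

# Hypothetical token configured for this bot; load it from a secret store in practice.
EXPECTED_AUTH_TOKEN = "example-token"


def is_authorized(payload: dict, expected_token: str = EXPECTED_AUTH_TOKEN) -> bool:
    """Check the authToken carried in a request body sent by CVG."""
    # Assumption: the token is a top-level string field named "authToken".
    token = payload.get("authToken")
    if not isinstance(token, str):
        return False
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(token.encode("utf-8"), expected_token.encode("utf-8"))


def handle_bot_request(payload: dict) -> int:
    """Return an HTTP-style status code for an incoming Bot API call (illustrative)."""
    return 204 if is_authorized(payload) else 401


print(handle_bot_request({"dialogId": "abc", "authToken": "example-token"}))  # 204
print(handle_bot_request({"dialogId": "abc"}))  # 401
```

Because the token is part of every request, the check needs no session state and can sit in front of every endpoint handler.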

Documentation Updates

New documentation has been crafted to aid with the adoption of the updated authentication process. This concise guide offers insights into the rationale behind bot authentication requirements as well as instructions to ensure correct implementation using CVG’s APIs.

Your Attention required

We appreciate your attention to these updates as we move toward a more secure and robust integration.

Please make sure to review the documentation and update your custom bots accordingly to comply with the new security standards before the enforcement deadline at the end of the year. Your cooperation is vital in maintaining the security and integrity of our shared ecosystem.

Bots using any of the ready-made integrations built by us or our partners will use authentication without requiring any change to existing bot designs.

For any questions or concerns, please reach out to our support team, who are happy to assist you through this transition.

Improvements of ChatGPT Integration

Adjustable Speech Speed for TTS in ChatGPT Bots, Smart Dialog and Smart Dialog Assistant

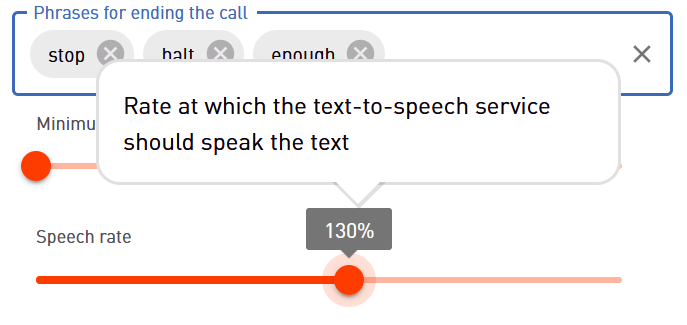

Do you find that the majority of Text-to-Speech (TTS) voices could talk a bit faster? Well, we’ve got good news! You can now easily adjust the speech speed of a ChatGPT-based bot in its configuration settings.

There is a minimum adjustable value of 50%, translating into the selected TTS voice speaking half as fast as the default setting. On the flip side, a top-notch setting of 200% means the voice will speed-talk twice as fast as the default — perfect for when your customers are in the mood for a little adrenaline rush! The actual speech speed, however, depends on the TTS voice you choose.

TTS Voice needs to support SSML

Please note that adjusting the speech speed is only possible with TTS voices that support Speech Synthesis Markup Language (SSML). This applies to voices made available through CVG from IBM, Google, Microsoft and Nuance. In an irony of ironies, OpenAI’s own TTS voices do not support SSML yet. However, their default speech speed tends to be noticeably chipper and fast.

Faster is not always better, balance is the key. Set the speed that your bot’s target audience finds comfortable and efficient to ensure optimal communication without unwanted hecticness.
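As a rough illustration of why SSML support matters here, the following Python sketch wraps a text in the standard SSML prosody element with a percentage rate, clamped to the 50% to 200% range described above. How CVG builds the markup internally is not documented in these notes, and the function name is hypothetical; individual TTS vendors may also interpret rate values slightly differently.

```python
from xml.sax.saxutils import escape

MIN_SPEED, MAX_SPEED = 50, 200  # percent, the range named above


def with_speech_speed(text: str, speed_percent: int) -> str:
    """Wrap text in SSML that asks the voice to speak at the given relative speed."""
    # Clamp out-of-range values to the supported range.
    speed = max(MIN_SPEED, min(MAX_SPEED, speed_percent))
    # Escape XML special characters so the text cannot break the markup.
    return f'<speak><prosody rate="{speed}%">{escape(text)}</prosody></speak>'


print(with_speech_speed("Hello & welcome", 150))
# <speak><prosody rate="150%">Hello &amp; welcome</prosody></speak>
```

A voice that ignores or rejects SSML has no way to honor this markup, which is why the setting has no effect there.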

Configurable Prompts for Function Calls

Function calls can be used for call control in ChatGPT and Smart Dialog bots instead of having ChatGPT generate JSON. New input fields allow you as a bot designer to tell ChatGPT what to say before a function call is executed (call is forwarded or terminated, custom data is saved). Your bots are now equipped to handle transitions with the grace of a British butler. With these new input fields, you can ensure they never make an awkward exit. Whether you need them to say a polite “farewell” or a warm “catch you later” before handing over control or saving custom data, we’ve got you covered.

If you’d rather keep things straightforward, leave the fields blank, and our ChatGPT integration will use a default prompt that’s courteous enough not to spill any virtual tea.

Smaller Improvements and Fixes

External CallId for Dialogs initiated by Outbound Calls

You can now assign an external callId to dialogs initiated by outbound calls using the /call/dial endpoint. This new feature enhances call tracking capabilities by giving you the flexibility to set custom identifiers, streamlining your interaction management process.

More importantly, this enhancement allows you to retrieve dialog data that corresponds to these specified external callIds. This simplifies or even enables data extraction for downstream systems.

Display Fallback STT/TTS Service if it was used

If the primary STT or TTS service was not available — although this rarely happens, even with the integrated hyperscalers Amazon, IBM, Google and Microsoft — the fallback STT/TTS service used instead is now displayed in the dialog data.